Causal Inference in a nutshell

Logistics optimization: optimizing delivery drivers’ locations for Gokada using causal inference.

INTRODUCTION

Our client is Gokada, the largest last-mile delivery service in Nigeria. Gokada partners with motorbike owners and drivers to deliver parcels across Lagos, Nigeria. It has completed more than a million deliveries in less than a year, with a fleet of over 1,200 riders. One key issue Gokada has faced as it expands its service is the sub-optimal placement of pilots (Gokada calls its motorbike drivers “pilots”) relative to the clients who want to use Gokada to send parcels. This has led to a high number of unfulfilled delivery requests.

This project aims to solve this problem by working with the company’s data to help it understand the primary causes of unfulfilled requests, and to come up with solutions that recommend driver locations which increase the fraction of completed orders. Since drivers are paid based on the number of requests they accept, such a solution will help Gokada grow, both through higher client satisfaction and through more business.

OBJECTIVE OF THE PROJECT

Apply causal inference to optimize drivers’ whereabouts relative to customer orders, so that the company can make the best use of its pilots’ locations.

LITERATURE REVIEW

Most studies in the health, social and behavioral sciences aim to answer causal questions. Such questions require some knowledge of the data-generating process, and cannot be computed from the data alone, nor from the distributions that govern the data. Remarkably, although much of the conceptual framework and algorithmic tools needed for tackling such problems are now well established, they are not known to many of the researchers who could put them into practical use.

According to a recent paper that summarizes the state of the art on how convenient access to copious data impacts our ability to learn causal effects and relations, causality is a generic relationship between an effect and the cause that gives rise to it. It is hard to define, and we often know about causes and effects only intuitively. The paper illustrates the idea with the following propositions: because it rained, the streets were wet; because the student did not study, he did poorly on the exam; because the oven was hot, the cheese melted on the pizza. When learning causality from data, we need to be aware of the difference between statistical association and causation.

Judea Pearl, in his review titled “Causal inference in statistics”, states that the questions that motivate most studies in the health, social and behavioral sciences are not associational but causal in nature. He supports this idea with the following examples: What is the efficacy of a given drug in a given population? Can data prove an employer guilty of hiring discrimination? What fraction of past crimes could have been avoided by a given policy? What was the cause of death of a given individual in a specific incident? These are causal questions because they require some knowledge of the data-generating process; they cannot be computed from the data alone, nor from the distributions that govern the data.

Traditional statistics is strong at devising ways of describing data and inferring distributional parameters from a sample. Causal inference requires two additional ingredients: a science-friendly language for articulating causal knowledge, and a mathematical machinery for processing that knowledge, combining it with data and drawing new causal conclusions about a phenomenon.

DATA

There are two datasets available for this project. The first one contains information about the completed orders. The second set contains delivery requests by clients (completed and unfulfilled).

By merging (inner-joining) the two tables, we obtain information about each trip, including the drivers’ whereabouts at the time that order was placed. The two datasets were joined on the trip.Trip ID attribute, which corresponds to the driverLocation.order_id feature in the driver_location_during_request data frame.
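A minimal pandas sketch of the inner join described above. Only the `Trip ID` and `order_id` join keys come from the text; the other column names and all rows are hypothetical toy values standing in for Gokada's data.

```python
import pandas as pd

# Hypothetical toy rows; only the join keys "Trip ID" and "order_id" come from the text.
trips = pd.DataFrame({
    "Trip ID": [391996, 391997, 391998],
    "trip_origin_lat": [6.5088, 6.4315, 6.6300],
    "trip_origin_lng": [3.3774, 3.4571, 3.3300],
})
driver_location_during_request = pd.DataFrame({
    "order_id": [391996, 391996, 391997, 400000],
    "driver_id": [243828, 243588, 243830, 245000],
    "driver_action": ["accepted", "rejected", "accepted", "accepted"],
})

# Inner join: keep only trips that have at least one driver-location record,
# and only driver-location records that belong to a known trip.
merged = trips.merge(
    driver_location_during_request,
    how="inner",
    left_on="Trip ID",
    right_on="order_id",
)
print(merged[["Trip ID", "driver_id", "driver_action"]])
```

An inner join drops trips that have no driver-location record (and vice versa); if those trips matter when counting unfulfilled requests, a left join (`how="left"`) would keep them.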

EXPLORATORY DATA ANALYSIS

- The fulfillment rate of trips, where a fulfilled trip is defined as an accepted order completed in less than 30 minutes, is about 30%.

- The distance between a trip’s origin and the candidate drivers’ positions is concentrated around 0 km.
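The two EDA quantities above can be computed roughly as follows. This sketch assumes hypothetical column names (`driver_action`, `trip_start`, `trip_end`, and latitude/longitude columns for the trip origin and the driver) and toy rows. It applies the 30-minute fulfillment definition and measures the origin-to-driver distance with the haversine (great-circle) formula, which is an assumption about how the distance was measured.

```python
import numpy as np
import pandas as pd

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lng1, lat2, lng2):
    """Great-circle distance in km between points given in decimal degrees (vectorized)."""
    lat1, lng1, lat2, lng2 = map(np.radians, (lat1, lng1, lat2, lng2))
    dlat = lat2 - lat1
    dlng = lng2 - lng1
    a = np.sin(dlat / 2) ** 2 + np.cos(lat1) * np.cos(lat2) * np.sin(dlng / 2) ** 2
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))

# Hypothetical merged rows (all column names are assumptions).
df = pd.DataFrame({
    "driver_action": ["accepted", "accepted", "rejected"],
    "trip_start": pd.to_datetime(["2021-01-01 08:00", "2021-01-01 09:00", "2021-01-01 10:00"]),
    "trip_end": pd.to_datetime(["2021-01-01 08:20", "2021-01-01 09:45", "2021-01-01 10:10"]),
    "origin_lat": [6.50, 6.50, 6.45],
    "origin_lng": [3.37, 3.37, 3.40],
    "driver_lat": [6.50, 6.51, 6.45],
    "driver_lng": [3.37, 3.37, 3.41],
})

duration_min = (df["trip_end"] - df["trip_start"]).dt.total_seconds() / 60
# Fulfilled = accepted AND completed in under 30 minutes (the definition used above).
df["fulfilled"] = (df["driver_action"] == "accepted") & (duration_min < 30)
df["distance_km"] = haversine_km(df["origin_lat"], df["origin_lng"],
                                 df["driver_lat"], df["driver_lng"])

print(f"fulfillment rate: {df['fulfilled'].mean():.0%}")
print(df["distance_km"].describe())
```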

METHODOLOGY

Solving the logistics optimization problem requires answering key questions such as “What would happen if drivers were 1 km away from where they are now?”. Over the last few decades, Judea Pearl and his research group have developed a solid theoretical framework, called causal inference, for dealing with these kinds of “What if?” and “What would have been?” questions. There is also a considerable amount of active research on integrating causal inference with mainstream machine learning techniques.
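To make the difference between association and intervention concrete, here is a toy example of Pearl's back-door adjustment, P(y | do(x)) = Σ_z P(z) P(y | x, z). The DAG (rush hour confounding both whether the driver is far from the pickup and whether the trip is fulfilled) and every probability in it are invented for illustration, not estimated from Gokada's data.

```python
# Hypothetical toy model: every probability below is invented for illustration.
# DAG: rush_hour -> driver_far, rush_hour -> fulfilled, driver_far -> fulfilled
P_RUSH = {0: 0.5, 1: 0.5}               # P(rush_hour = r)
P_FAR_GIVEN_RUSH = {0: 0.2, 1: 0.8}     # P(driver_far = 1 | rush_hour = r)

def p_fulfilled(far, rush):
    """P(fulfilled = 1 | driver_far, rush_hour) in the toy model."""
    return 0.7 - 0.3 * far - 0.2 * rush

def p_far(far, rush):
    """P(driver_far = far | rush_hour = rush)."""
    p1 = P_FAR_GIVEN_RUSH[rush]
    return p1 if far == 1 else 1 - p1

def observational(far):
    """P(fulfilled = 1 | driver_far = far): plain conditioning (association)."""
    joint = {r: P_RUSH[r] * p_far(far, r) for r in (0, 1)}
    total = sum(joint.values())
    return sum(joint[r] / total * p_fulfilled(far, r) for r in (0, 1))

def interventional(far):
    """P(fulfilled = 1 | do(driver_far = far)) via back-door adjustment over rush_hour."""
    return sum(P_RUSH[r] * p_fulfilled(far, r) for r in (0, 1))

print(f"P(fulfilled | far=0)     = {observational(0):.2f}")   # 0.66
print(f"P(fulfilled | do(far=0)) = {interventional(0):.2f}")  # 0.60
```

Conditioning on `driver_far = 0` overstates the benefit of a nearby driver (0.66), because nearby drivers are more common outside rush hour, when fulfillment is higher anyway; the adjusted value (0.60) is what the toy model predicts if drivers were placed close to pickups regardless of the hour.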

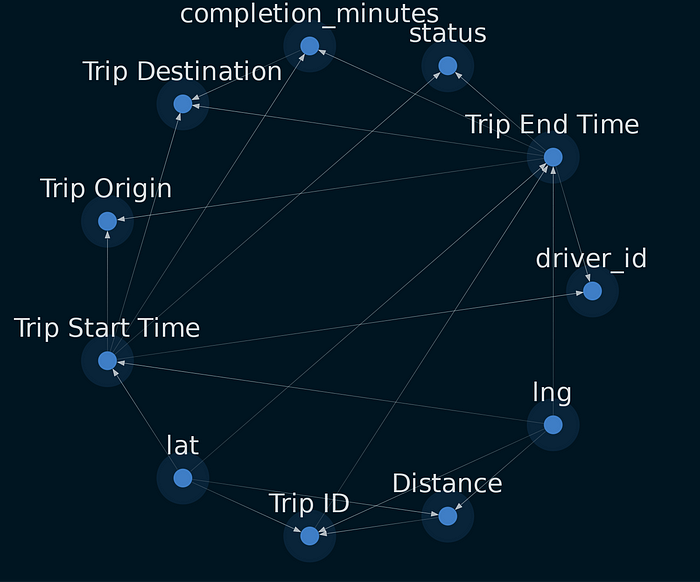

The causal graph (or DAG), which encodes assumptions about the data-generating process, is a central object in Judea Pearl’s causal inference framework. Unfortunately, the causal graph for a given problem is often unknown, subject to personal knowledge and bias, or only loosely connected to the available data. One objective of this task is to highlight this issue in a concrete way: causal graphs are learned here from increasing fractions of the data and compared with the ground-truth graph. The comparison can be done with the Jaccard similarity index, which divides the number of edges the two graphs have in common (their intersection) by the number of edges in their union.
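The Jaccard comparison of two causal graphs reduces to set operations on their edge lists. In this sketch the node names and both graphs are hypothetical; in the procedure described above, `learned` would be the graph obtained from one fraction of the data and `ground_truth` the reference graph.

```python
def edge_jaccard(edges_a, edges_b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| between two sets of directed edges."""
    a, b = set(edges_a), set(edges_b)
    if not a and not b:
        return 1.0  # two empty graphs are treated as identical
    return len(a & b) / len(a | b)

# Hypothetical graphs: a reference graph vs a graph learned from a fraction of the data.
ground_truth = {("hour", "distance"), ("distance", "fulfilled"), ("hour", "fulfilled")}
learned = {("hour", "distance"), ("distance", "fulfilled"), ("fulfilled", "hour")}

print(edge_jaccard(ground_truth, learned))  # 0.5
```

Edges are treated as directed here, so a reversed edge counts as both a missing edge and an extra one.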

Common frameworks for causal inference include the causal pie model, Pearl’s structural causal model, structural equation modeling, and the Rubin causal model, which are often used in areas such as the social sciences and medical inference. For this project, after the necessary exploratory data analysis, transformation, and preparation of the data, we perform a causal inference task using Pearl’s framework and try to answer questions like:

- If drivers are recommended to move 1 km every 30 minutes in a selected direction, what happens to the number of unfulfilled requests?

- If we assume we know the location of the next 20% of orders to within 5 km, what happens to the number of unfulfilled requests?

- Had we changed the requirements on drivers’ operating times in the past, what fraction of orders could have been completed?
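The first question can be explored with a purely geometric what-if: shift each driver 1 km along a chosen compass bearing (using the standard great-circle destination-point formula) and count how many pickups have a driver within some radius. The positions, the 1.5 km radius, and the `coverage` proxy are hypothetical. The sketch assumes that nothing else (for example, drivers' willingness to accept) changes, so on its own it is not a causal estimate of unfulfilled requests.

```python
import math

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lng1, lat2, lng2):
    """Great-circle distance in km between two (lat, lng) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lng2 - lng1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def move_point(lat, lng, distance_km, bearing_deg):
    """Destination reached by travelling distance_km from (lat, lng) along a great
    circle with the given initial bearing (0 = north, 90 = east)."""
    phi1, lam1 = math.radians(lat), math.radians(lng)
    theta = math.radians(bearing_deg)
    delta = distance_km / EARTH_RADIUS_KM  # angular distance in radians
    phi2 = math.asin(math.sin(phi1) * math.cos(delta)
                     + math.cos(phi1) * math.sin(delta) * math.cos(theta))
    lam2 = lam1 + math.atan2(math.sin(theta) * math.sin(delta) * math.cos(phi1),
                             math.cos(delta) - math.sin(phi1) * math.sin(phi2))
    return math.degrees(phi2), math.degrees(lam2)

def coverage(drivers, pickups, radius_km):
    """Fraction of pickups with at least one driver within radius_km
    (a crude, hypothetical proxy for 'could have been served')."""
    covered = sum(any(haversine_km(*d, *p) <= radius_km for d in drivers) for p in pickups)
    return covered / len(pickups)

# Hypothetical positions in Lagos (decimal degrees).
drivers = [(6.50, 3.35)]
pickups = [(6.50, 3.37), (6.50, 3.33)]
shifted = [move_point(lat, lng, distance_km=1.0, bearing_deg=90) for lat, lng in drivers]

print(coverage(drivers, pickups, radius_km=1.5))  # 0.0 before the move
print(coverage(shifted, pickups, radius_km=1.5))  # 0.5 after moving 1 km east
```

With a single driver between two pickups, moving east helps the eastern pickup and hurts the western one, which is why the choice of direction matters.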

Finally, we train XGBoost and Random Forest models, one set using all variables and another using only the variables selected by the causal graph, and measure how much each model overfits, i.e., how much its performance drops on data it was not trained on.
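A minimal sketch of the overfitting comparison, using scikit-learn's `RandomForestClassifier` on synthetic data from `make_classification` instead of the real merged table. With `shuffle=False`, the informative features are the first columns, so `graph_cols = [0, 1, 2]` stands in, hypothetically, for the variables a causal graph would select. The train/test accuracy gap is used as the overfitting measure, which is an assumption about how it was measured.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the merged Gokada table; the real feature columns differ.
X, y = make_classification(n_samples=2000, n_features=10, n_informative=3,
                           n_redundant=0, shuffle=False, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

def overfit_gap(model, cols):
    """Train on the given feature columns and return (train_acc, test_acc, gap)."""
    model.fit(X_train[:, cols], y_train)
    train_acc = model.score(X_train[:, cols], y_train)
    test_acc = model.score(X_test[:, cols], y_test)
    return train_acc, test_acc, train_acc - test_acc

all_cols = list(range(X.shape[1]))
graph_cols = [0, 1, 2]  # hypothetical: columns a learned causal graph would select

results = {}
for name, cols in [("all features", all_cols), ("graph-selected", graph_cols)]:
    rf = RandomForestClassifier(n_estimators=100, random_state=0)
    results[name] = overfit_gap(rf, cols)
    train_acc, test_acc, gap = results[name]
    print(f"{name:15s} train={train_acc:.3f} test={test_acc:.3f} gap={gap:.3f}")
```

`xgboost.XGBClassifier` implements the scikit-learn estimator interface, so it can be passed to `overfit_gap` in the same way.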

RESULTS

After applying the NOTEARS algorithm to learn the structure and removing edges whose weights fall below the threshold, the subgraph above was drawn. Features for the ML algorithms were then selected based on the causal graph, as depicted below.
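NOTEARS returns a weighted adjacency matrix W in which a nonzero W[i, j] denotes an edge from variable i to variable j. The sketch below thresholds such a matrix and then keeps the direct parents of the target as features. The variable names, the weights, and the 0.3 threshold are invented for illustration (they are not the project's learned graph), and selecting direct parents is only one possible rule; the text does not say which rule was used.

```python
import numpy as np

def threshold_edges(W, names, threshold):
    """Keep edges i -> j whose absolute weight |W[i, j]| is at least `threshold`.
    W follows the NOTEARS convention: W[i, j] != 0 means an edge from node i to node j."""
    rows, cols = np.nonzero(np.abs(W) >= threshold)
    return {(names[i], names[j]) for i, j in zip(rows, cols)}

def parents_of(edges, target):
    """Direct parents of `target` in the thresholded graph, sorted by name."""
    return sorted(src for src, dst in edges if dst == target)

# Hypothetical names and weights, not the project's learned graph.
names = ["trip_start_hour", "trip_end_hour", "distance_km", "fulfilled"]
W = np.array([
    [0.0, 0.9, 0.1, 1.2],
    [0.0, 0.0, 0.0, 0.8],
    [0.0, 0.0, 0.0, 0.05],
    [0.0, 0.0, 0.0, 0.0],
])

edges = threshold_edges(W, names, threshold=0.3)
features = parents_of(edges, "fulfilled")
print(features)  # ['trip_end_hour', 'trip_start_hour']
```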

CHALLENGES

- During the implementation of this project, the first challenge was understanding the basic idea behind causal inference and how it differs from correlation.

- Feature engineering, i.e. extracting useful additional features from the dataset, required some creativity and was time-consuming, and thus challenging.

- Another technical challenge was setting up MLflow together with CML to capture the run artifacts (mlruns) of the XGBoost and Random Forest models.

CONCLUSION

After implementing the project, the following conclusions were drawn.

- From the causal inference step and the learned graphs, the trip start and end time features were selected as the predominant features, and they were used to train three types of ML models.

- Among the ML models, the decision tree classifier yielded the highest accuracy.

- Feature importance analysis again identified the trip start and end times as the most important features.

FUTURE WORK

In the future, the following improvements are proposed to complete this work more fully. The first is, of course, a better visualization and presentation of the insights from the EDA, together with a public deployment that takes some parameters as input and applies the causal inference models to perform logistics optimization. We also plan to train additional models, such as logistic regression, to check whether we can obtain better MSE and R² scores and choose a better ML model.

REFERENCES: FURTHER READING

http://bayes.cs.ucla.edu/BOOK-2K/

https://arxiv.org/pdf/1803.01422.pdf

https://dl.acm.org/doi/pdf/10.1145/3397269

https://en.wikipedia.org/wiki/Causal_graph

https://www.youtube.com/watch?v=pEBI0vF45ic

https://www.shanelynn.ie/analysis-of-weather-data-using-pandas-python-and-seaborn/